AWS Autogluon

AutoGluon is an open-source machine learning (ML) framework from AWS that automates the process of developing highly accurate ML models. It is designed to simplify model selection and hyperparameter tuning, which can be time-consuming and require significant expertise.

AutoGluon was originally built on top of Apache MXNet, a deep learning framework; more recent releases use PyTorch for their deep learning models. It offers an easy-to-use interface for training and deploying models and supports a wide range of ML tasks, including tabular prediction, image classification, object detection, natural language processing, and time series forecasting.

One of the key features of Autogluon is its automatic model selection and hyperparameter tuning. The framework uses advanced algorithms to search for the best model architecture and hyperparameters, while also taking into account factors such as dataset size, task complexity, and available compute resources.
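The idea of automatic selection can be illustrated with a toy sketch: score several candidate configurations on held-out data and keep the best one. This is not AutoGluon's actual search procedure; the dataset, the threshold "models", and all names below are made up for illustration.

```python
import random

# Toy held-out data: one numeric feature x, label is 1 when x > 0.6.
rng = random.Random(0)
holdout = [(x, int(x > 0.6)) for x in (rng.random() for _ in range(200))]

def make_threshold_model(threshold):
    """Return a classifier that predicts 1 when x exceeds the threshold."""
    return lambda x: int(x > threshold)

def accuracy(model, data):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(x) == y for x, y in data) / len(data)

# Candidate "hyperparameters": a small grid of thresholds.
candidates = [0.2, 0.4, 0.6, 0.8]

# Score every candidate on the held-out rows and keep the best one.
scores = {t: accuracy(make_threshold_model(t), holdout) for t in candidates}
best_threshold = max(scores, key=scores.get)

print(scores)
print("best threshold:", best_threshold)
```

A real AutoML system compares far richer model families, but the selection step follows the same pattern of ranking candidates by a validation score.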

Overall, AWS AutoGluon offers a powerful and efficient way to develop high-quality ML models, even for users with limited ML expertise.

Install AWS AutoGluon for Python

To install AWS Autogluon in Python, you can use pip, which is the default package manager for Python. Here are the steps:

1. Open a command prompt or terminal window.

2. Type the following command to install AWS Autogluon:

pip install autogluon

3. Press Enter to execute the command.

4. Wait for the installation to complete.

That's it! Once the installation is complete, you can start using AWS AutoGluon in your Python projects by importing the autogluon packages.
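To confirm that the installation worked before moving on, a small check like the following can help. It is a minimal sketch using only the standard library; the helper name is_installed is an assumption introduced here.

```python
import importlib.util

def is_installed(package_name):
    """Return True if the named top-level package can be found by the import system."""
    return importlib.util.find_spec(package_name) is not None

if is_installed("autogluon"):
    print("autogluon is available")
else:
    print("autogluon not found; run `pip install autogluon` first")
```

The check only looks the package up; it does not import AutoGluon, so it returns quickly even in a large environment.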

Import the AWS AutoGluon library

To import the AutoGluon library in Python, you can use the following code:

import autogluon.core as ag

This imports AutoGluon's core components under the alias ag. The task-specific predictors live in their own subpackages, such as autogluon.tabular for tabular data.

You can also import specific classes by using the from keyword. For example, to import only the two classes used in the rest of this post, you can use the following code:

from autogluon.tabular import TabularDataset, TabularPredictor

TabularDataset loads tabular data, and TabularPredictor trains models and makes predictions. Older tutorials import TabularPrediction as task instead; current releases use TabularPredictor.

Load the training data

TabularDataset('https://autogluon.s3.amazonaws.com/datasets/Inc/train.csv') loads a tabular dataset in AutoGluon from a URL. The result behaves like a pandas DataFrame, so you could also load the same file with the pandas read_csv() function. The code below loads the data with TabularDataset and keeps a random subsample of 500 rows so that training runs quickly:

train_data = TabularDataset('https://autogluon.s3.amazonaws.com/datasets/Inc/train.csv')

subsample_size = 500

train_data = train_data.sample(n=subsample_size, random_state=0)

train_data.head()

Setting the Y label

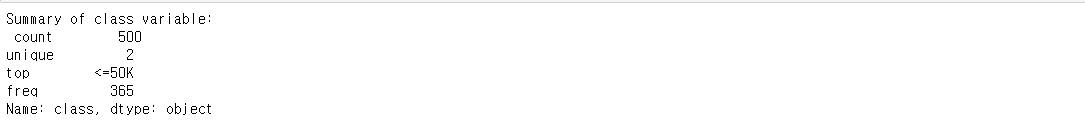

label = 'class'

print("Summary of class variable: \n", train_data[label].describe())
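For a non-numeric column such as a class label, pandas' describe() reports four values: count, unique, top (the most frequent value), and freq (how often it occurs). The sketch below computes the same four numbers with the standard library, which makes it clear what the summary means. The label values are hypothetical and not taken from the dataset.

```python
from collections import Counter

def describe_categorical(values):
    """Summarize a categorical column: count, unique, top (mode), and freq."""
    counts = Counter(values)
    top, freq = counts.most_common(1)[0]
    return {"count": len(values), "unique": len(counts), "top": top, "freq": freq}

# Hypothetical label values, made up for illustration only.
labels = ["<=50K", "<=50K", ">50K", "<=50K", ">50K"]
summary = describe_categorical(labels)
print(summary)
```

If top accounts for most of count, the classes are imbalanced, which is worth knowing before reading an accuracy score.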

Specify where to save the model and train it on the training data

# specify the location to save the trained model

save_path = 'agModels-predictClass' # specifies folder to store trained models

# train the model on the training data

predictor = TabularPredictor(label=label, path=save_path).fit(train_data)

Or, as a single self-contained script:

from autogluon.tabular import TabularDataset, TabularPredictor

# specify the location to save the trained model

save_path = 'agModels-predictClass'

# load the training data

train_data = TabularDataset('https://autogluon.s3.amazonaws.com/datasets/Inc/train.csv')

# specify the name of the target variable

label = 'class'

# train the model on the training data; fit() writes the trained models under save_path

predictor = TabularPredictor(label=label, path=save_path).fit(train_data)

In this example, the trained models are saved to the agModels-predictClass directory. The training data is loaded from a URL using TabularDataset(), and the name of the target variable is specified as class. The TabularPredictor class defines the prediction task, and its fit() method trains the models on the training data. Because path=save_path is passed to the constructor, fit() writes the trained models to that location as it runs, so no separate save step is needed.
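Training can take a while on larger datasets. As a rough sketch, fit() in recent AutoGluon releases accepts a time_limit (in seconds) and a presets argument, and the helper below wraps both. The function name train_income_predictor and its default values are illustrative assumptions, not part of the original walkthrough.

```python
def train_income_predictor(train_data, label="class",
                           save_path="agModels-predictClass",
                           time_limit=60, presets="medium_quality"):
    """Train a TabularPredictor under a time budget; models are written under save_path."""
    # Imported lazily so that defining this helper does not require AutoGluon.
    from autogluon.tabular import TabularPredictor

    predictor = TabularPredictor(label=label, path=save_path)
    # time_limit is a budget in seconds; presets trades accuracy against training time.
    predictor.fit(train_data, time_limit=time_limit, presets=presets)
    return predictor
```

Calling train_income_predictor(train_data) with the subsampled data from earlier should return a fitted predictor; raising time_limit generally gives AutoGluon room to train more models.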

Preprocess the test data

"Remove the target variable for prediction"

When making predictions with a machine learning model, you typically need to remove the target variable (also known as the dependent variable or response variable) from the data that you are making predictions on. This is because the target variable is what you are trying to predict, so it cannot be included as a feature in the prediction process.

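Here's an example that loads the test data and removes the target variable using Python and pandas:

test_data = TabularDataset('https://autogluon.s3.amazonaws.com/datasets/Inc/test.csv') # test split that accompanies train.csv

y_test = test_data[label] # values to predict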

test_data_nolab = test_data.drop(columns=[label]) # delete label column to prove we're not cheating

test_data_nolab.head()
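The same separation can be sketched without pandas to show exactly what happens to the data. The rows and column names below are hypothetical stand-ins for test_data, and split_features_and_label is a helper introduced only for this illustration.

```python
def split_features_and_label(rows, label):
    """Return (features, targets): each row without the label key, and the label values."""
    targets = [row[label] for row in rows]
    features = [{k: v for k, v in row.items() if k != label} for row in rows]
    return features, targets

# Hypothetical rows standing in for test_data; the column names are made up.
rows = [
    {"age": 31, "education": "Bachelors", "class": "<=50K"},
    {"age": 45, "education": "Masters", "class": ">50K"},
]
features, targets = split_features_and_label(rows, label="class")
print(features)
print(targets)
```

Like drop(columns=[label]) in pandas, this builds new feature rows and leaves the original data untouched, so the true labels stay available for evaluation later.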

Verify the prediction results

Load the saved predictor -> Run the prediction -> Examine the prediction results -> Evaluate the model

predictor = TabularPredictor.load(save_path) # unnecessary, just demonstrates how to load previously-trained predictor from file

y_pred = predictor.predict(test_data_nolab)

print("Predictions: \n", y_pred)

Evaluate the model

perf = predictor.evaluate_predictions(y_true=y_test, y_pred=y_pred)
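For classification problems, the headline number is typically accuracy: the share of predictions that match the true labels. The following is a minimal sketch of that calculation, assuming plain Python lists; the labels and predictions are made up, and this is not AutoGluon's own implementation.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that exactly match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("cannot compute accuracy on empty input")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

# Hypothetical labels and predictions, made up for illustration.
y_true_demo = ["<=50K", ">50K", "<=50K", "<=50K"]
y_pred_demo = ["<=50K", "<=50K", "<=50K", "<=50K"]
score = accuracy(y_true_demo, y_pred_demo)
print(score)
```

Note that a model predicting only the majority class still scores 0.75 here, which is why accuracy should be read alongside the class distribution.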

Check the accuracy of each model

predictor.leaderboard(test_data, silent=True)
